Tests pass. App still not working for the end user.

Does this sound familiar? An app was developed, tested, and made available in production. And then: the users complain that the app is not working. The app however passes all tests. How can this be? And how can this be solved?

Testing is like the holy grail in software development. Having written, at least once, code that has full test and code coverage is the crusade many developers commit themselves to. To achieve their goal, they go on a quest with an unknown outcome, searching for the best way of writing testable code, led by myths and legends about code that has every possible scenario tested. Yet only a few find the holy grail. Testing is not only a spiritual quest. It is also a unicorn. Junior developers believe in it. Until one day they realize there is no fairy dust, no magic, that it might be a myth. That is the day they turn into senior developers. Although … this is not true for everyone in software development.

There is a lot of code out there that comes with 100% coverage and test scenarios that cover all use cases. 100% code coverage implies: everything known that can happen is tested. When all possible usage scenarios are tested, all code should be tested. It is not hard to argue that 100% code coverage is possible, or even a must. Libraries are the perfect use case for 100% coverage: they provide their functionality via a public API to any app that consumes it. This makes high code coverage mandatory for libraries, but also “easy” to achieve. If an API with input A should return B, this is what needs to be tested. Unfortunately, the world isn’t always so simple. Simple rule: the closer you move to the end user, the harder it gets. That’s not only because with the end user the final boss enters the stage. The number of testing variants increases drastically: devices, access, languages, roles, etc. Let’s not go too deep into general testing. The main focus here is of course SAP. How does testing work with Fiori apps?

Fiori Apps

Fiori / UI5 apps are no exception regarding the complexity of testing web apps. For Fiori apps it can get even more complex: the UI5 version is mainly defined by the Fiori Launchpad, a custom theme can be used, spaces and pages can be activated, the FLP can be local or in the cloud, the app can be integrated into a complex process, etc. The support cycle of apps is different from the one of the launchpad they are integrated into. There are many parameters that can change and that are even out of the control of the app developer.

On top of this, testing a UI5 app was an overly complex task until a few years ago. The included mock server works more or less for OData v2, but not with v4. Blanket.js was used to measure code coverage at a time when that project was already struggling with problems, such as arrow functions resulting in an error. Sinon.js makes code testable (fakes, stubs, spies), yet sometimes raises the question whether your unit tests pass because your code works, or because you faked it till it worked. The included test recorder … works. OPA5 promises to make writing tests “very easy”. Yet the OPA5 code can get extensive, and when your OData service is v4 based, the included mock server might be a problem.

Over the last years, progress was made. The biggest leap forward is for sure wdi5. wdi5 brings writing tests for UI5 to the next level by building on a widely used framework: wdio (WebdriverIO). This is a step in the right direction: less doing everything internally, more using what is available or integrating with it. For code coverage, the UI5 code coverage middleware uses Istanbul, another widely used tool. The SAP Fiori Tools offer more tools like the Fiori Launchpad preview or serve static. The best you get from SAP UX is without doubt the mockserver. This mock server works with OData v2 and v4.

Does this now make testing UI5 apps easy? No.

Why? Testing is never easy. Testing is hard work. Test scenarios, test data and tests must be created, written and validated. A whole team must work together to make testing work. Continuously. Even when the app is done and live, tests continue. After all, one goal is to know if an app fails after some change was made in the production landscape. But is testing only complicated because it is a continuous task? And when everyone knows the benefit of testing and the work that comes with it, why do apps still fail in production? Why do apps not work for the end user? And why do apps fail for the end user while the tests pass?

Note: Apps are always tested

An important testing fact: apps are tested. When the company / customer has a certain size, chances are they have a testing team and tools in place that (should) ensure that apps are tested. This might be a test scenario in SolMan that is executed from time to time. Probably manually by someone who simply goes through a step-by-step documentation. Or some high-level smoke tests. These tests might even be unknown to the developer of the app. In case a developer states: the app is not tested, there is a good chance this is wrong. Maybe there are no tests provided by the developer. But this does not mean the app is not tested at all. Not to forget: apps are constantly tested by the end users. Each time they work with the app.

End user vs. developers

The testing tools mentioned above for UI5 are made by developers, for developers. It doesn’t matter if it is configuring the mock server, writing a unit test or an integration test. The target group for these tests is developers. This is the reason why testing is part of the UI5 SDK documentation. Knowledge of how UI5 works is needed. Programming knowledge is a must for writing QUnit, OPA5 or wdi5 tests. On top of that, knowledge of how the app is developed is needed. These tools allow the developer to validate the most complex requirements: are the UI controls there, what is the shown value, what are its properties.

Tests are written by a developer. While it does not have to be the same person who wrote the code under test, the tests are still written by a developer (in most cases: yes, it is the same person). While the journeys, the flow through the app, can be defined by the end user, it is the task of a developer to translate these into code. The translation of “I open the page, select an entry, I see the detail page” to code is up to the developer. The person who wrote the app is writing the tests that validate the correct functionality of the app. I hope you see the problem here. And not only because it is the same person: the tests are written in code. Most end users (who should also be the testers) have problems reading and understanding code. Yet, it must be the end user who defines and validates the tests. But they are mostly left out of this, as code is not the language they speak.

With the developer writing the app and the tests, it is easy and common to tune the tests. The developer can write QUnit tests to test the formatter logic and all tests pass. Even when there are 4 formatter functions and 60 unit tests, who validates that these test real-world scenarios? Or that these are updated to reflect the latest changes?
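One way to make formatter tests reviewable by a key user is to keep the scenarios as plain data with a description in business language. The following is a minimal sketch of that idea and not taken from any real app: the formatter `formatStockStatus`, its thresholds and the case list are hypothetical, and a real project would feed the same table into QUnit instead of the small `failedCases` helper.

```typescript
// Hypothetical formatter, for illustration only:
// maps a stock quantity to the status text shown in the UI.
function formatStockStatus(quantity: number): string {
  if (quantity <= 0) return "Out of stock";
  if (quantity < 10) return "Low stock";
  return "Available";
}

// One scenario, described in words a key user can read and sign off on.
interface FormatterCase {
  description: string;
  quantity: number;
  expected: string;
}

// Assumed scenarios; in a real project these come from the users / testers.
const stockStatusCases: FormatterCase[] = [
  { description: "Nothing left in the warehouse", quantity: 0, expected: "Out of stock" },
  { description: "Negative quantity after a booking correction", quantity: -3, expected: "Out of stock" },
  { description: "Only a few pieces left", quantity: 9, expected: "Low stock" },
  { description: "Enough pieces available", quantity: 10, expected: "Available" },
];

// Runs every scenario and returns a readable message for each failed one.
function failedCases(cases: FormatterCase[]): string[] {
  return cases
    .filter((c) => formatStockStatus(c.quantity) !== c.expected)
    .map((c) => `${c.description}: expected "${c.expected}", got "${formatStockStatus(c.quantity)}"`);
}
```

The point of the design is the `description` column: the user validates the list of scenarios, the developer only wires it to the test runner.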

The test result page for QUnit tests looks like this (example: Shopping Cart).

How many of your end users / testers can say that these tests are valid? These are tests for the developer, and therefore there is a certain risk that these tests are not completely trustworthy. In the first years of Fiori apps, it wasn’t uncommon to get apps that passed the unit test quality gate by including tests that checked if 1 === 1.

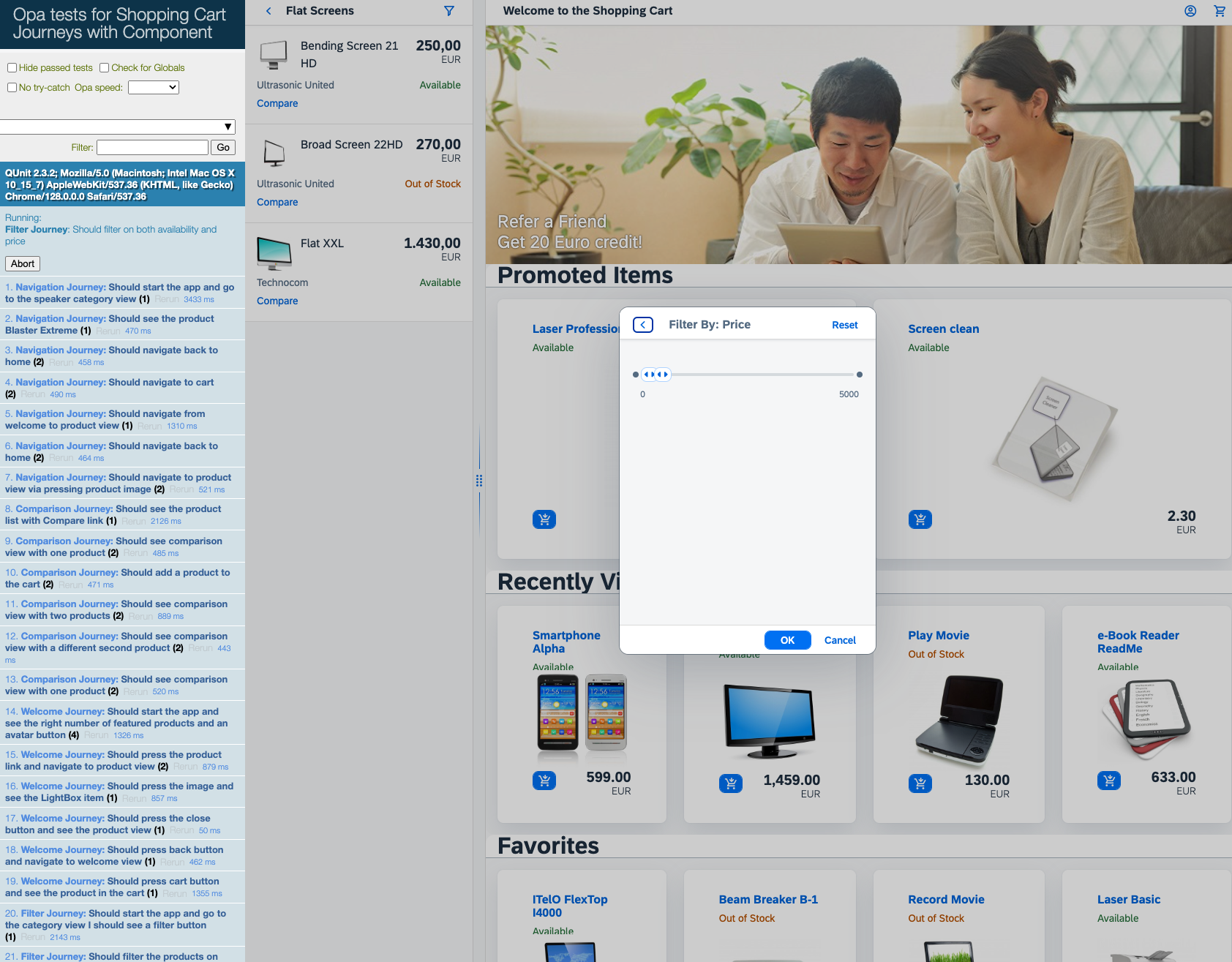

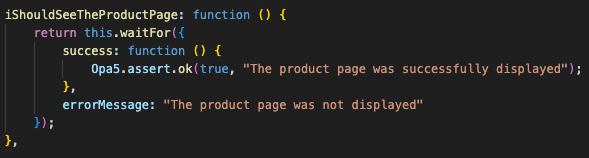

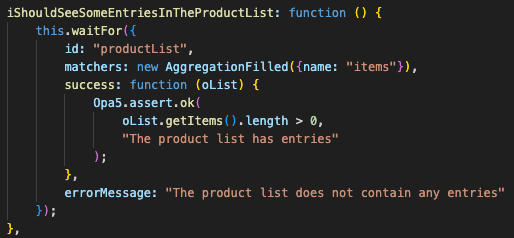

Testing the UI makes it easier to check what the developer is testing. Running e.g. OPA5 in the browser shows visually what the test is doing.

While it is good to see what is done, to be able to follow the actions and see how the app changes, it is still hard to find out what was tested. The test result looks like the one for the unit tests.

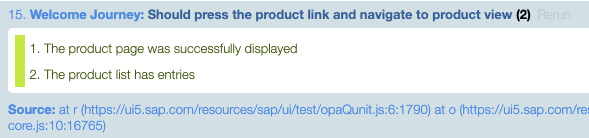

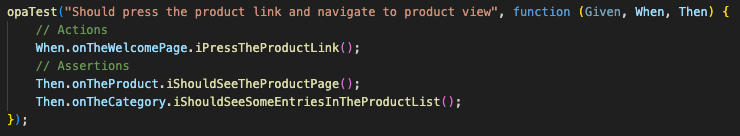

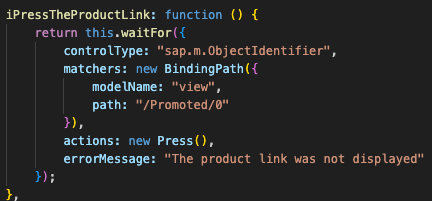

Raise your hand if you think that your end / key users can read this report and know what was done to pass the test. In case they want to find out what was tested, this is the journey:

The functions called to run the test are distributed over 3 files:

As said: OPA5 is for the developer. If the UI changes, do you feel comfortable enough to state: this will be caught by the OPA5 tests? Or that the testers can spot missing or outdated tests?

End user view

Even when your testing team and culture are top notch, not everything that makes an app break in production is easy to find with unit, integration or system tests. Sometimes apps are broken in production because of errors that are not errors for the tests: an icon is missing, data in row 5 is shifted, the detail page looks odd, a button is hidden behind a “show more” icon. Why? An erroneous or updated theme causes the browser to render a UI control differently. The tests use dummy data for texts, and in production the real values do not fit. Elements are hidden on a smartphone, while the OPA5 test was run at desktop resolution only.

In short: the app is tested. Yet the app is not working for the end user. A developer is not the end user. And this is one of the main reasons why apps fail in production. This problem cannot be solved. But the gap can be at least partially closed.

Closing the gap

The trick is to include the users / testers early on.

Spoiler: you won’t get away from writing tests. QUnit and wdi5 tests are what should be provided. For UI controls, these are a must-have.

How can you include the user early in the tests? First, early does not mean as early as when the first line of code is written, or earlier. It means: as soon as tests for the user can be written. And how to ensure that the user is willing to be actively involved?

By delivering information they not only understand, but also know and can influence.

Provide useful mock data

Try to get mock data from QA or the testing system as soon as possible. Auto-generated mock data like “Product A, Product B” or “Lorem ipsum dolor sit amet” is nice. However, when the user is used to working with “SAX31P0” and “SAX31P03 Operating voltage AC 230 V, 3-position positioning signal” as description, this is what the app must show during tests. Using dummy texts makes it easy for users / testers to keep their distance from the test results. It causes a “this is not ready, this is still under heavy development” feeling that prevents the users from committing to the tests. Using QA data early on comes with a nice side effect: in case the production texts contain characters like √ that might look “strange” in the presentation, this is caught early on.
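A small check can flag mock data that still looks generated before it is shown to users. This is only a sketch under assumptions: the record shape, the placeholder patterns and the function name `reviewMockData` are hypothetical, and the rule for “special” characters (anything outside printable ASCII) is deliberately crude.

```typescript
// A mock data row as field name -> text value (assumed shape).
type MockRecord = { [field: string]: string };

interface Finding {
  row: number;
  field: string;
  value: string;
  reason: string;
}

// Assumed placeholder patterns; adjust them to what your generator produces.
const placeholderPatterns: RegExp[] = [/lorem ipsum/i, /^Product [A-Z]$/];

// Returns one finding per value that is a placeholder text or
// contains a character outside printable ASCII (for example √).
function reviewMockData(records: MockRecord[]): Finding[] {
  const findings: Finding[] = [];
  records.forEach((record, row) => {
    Object.keys(record).forEach((field) => {
      const value = record[field];
      if (placeholderPatterns.some((pattern) => pattern.test(value))) {
        findings.push({ row, field, value, reason: "placeholder text" });
      }
      if (/[^\x20-\x7E]/.test(value)) {
        findings.push({ row, field, value, reason: "special character, check rendering" });
      }
    });
  });
  return findings;
}
```

Findings of the second kind are not errors. They mark the values worth looking at in the rendered app, ideally together with the user.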

Let the user record

To get the users involved early, provide them with the current version of the app and let them record the user journey actions. Instead of having the developer translate the user journey into code, let the user do this. It is their recorded journey that is used in the tests. Make them own it. Another side effect: the user is testing the UI while it is developed and can already provide input regarding the UX or other expectations.
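A recording only creates ownership if the user can read it back. The sketch below turns recorded steps into numbered sentences. It assumes a simplified step shape loosely modeled on the JSON export of a browser recorder (a `type`, an optional `url`, `value` and a list of `selectors`); the interfaces and function names are hypothetical and a real export contains more fields and step types.

```typescript
// Simplified, assumed shape of one recorded step.
interface RecordedStep {
  type: string;
  url?: string;
  value?: string;
  selectors?: string[][];
}

interface Recording {
  title: string;
  steps: RecordedStep[];
}

// Translates one step into a sentence; unknown step types are kept visible.
function describeStep(step: RecordedStep): string {
  const target = step.selectors?.[0]?.[0] ?? "unknown element";
  switch (step.type) {
    case "navigate":
      return `Open ${step.url ?? "unknown page"}`;
    case "click":
      return `Click ${target}`;
    case "change":
      return `Enter "${step.value ?? ""}" into ${target}`;
    default:
      return `Other step (${step.type})`;
  }
}

// Returns the journey as a numbered list the user can review.
function describeRecording(recording: Recording): string[] {
  return recording.steps.map((step, index) => `${index + 1}. ${describeStep(step)}`);
}
```

The resulting list can be put next to the test report, so the user sees their own journey and not only a green check mark.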

Visual testing

Validate with visual tests that the UI is working: that the text fits, that the icons are visible, that the layout works across device types. Instead of testing if a given ID exists in the HTML and triggering actions on it, record the UI as a whole. Capturing the app on all supported devices makes it possible to identify UX problems. Visual testing is possible e.g. using wdio (it can also take screenshots). The UI tests should be done with a tool that makes it easy to create screenshots and compare them against a baseline. Running visual tests in production helps to identify problems caused by updates. Baselines help to identify when a UI change was introduced.
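The core of a baseline comparison is small. The following sketch compares two screenshots pixel by pixel and returns the share of pixels that differ. It is an illustration of the principle, not how any particular tool implements it: the `Screenshot` shape (raw RGBA bytes), the helper `solidImage` and the `tolerance` parameter are assumptions, and real tools add anti-aliasing handling, ignore regions and diff images.

```typescript
// Raw RGBA image: 4 bytes per pixel, row by row (assumed shape).
interface Screenshot {
  width: number;
  height: number;
  data: Uint8Array;
}

// Helper for the example: an image filled with one color.
function solidImage(width: number, height: number, rgba: [number, number, number, number]): Screenshot {
  const data = new Uint8Array(width * height * 4);
  for (let i = 0; i < data.length; i++) {
    data[i] = rgba[i % 4];
  }
  return { width, height, data };
}

// Returns the ratio of differing pixels between 0 and 1.
// A size mismatch counts as a complete mismatch.
function mismatchRatio(baseline: Screenshot, current: Screenshot, tolerance = 0): number {
  if (baseline.width !== current.width || baseline.height !== current.height) {
    return 1;
  }
  const pixels = baseline.width * baseline.height;
  if (pixels === 0) {
    return 0;
  }
  let different = 0;
  for (let p = 0; p < pixels; p++) {
    const offset = p * 4;
    for (let channel = 0; channel < 4; channel++) {
      if (Math.abs(baseline.data[offset + channel] - current.data[offset + channel]) > tolerance) {
        different++;
        break; // count each pixel once
      }
    }
  }
  return different / pixels;
}
```

A test then fails when the ratio exceeds an agreed threshold. Running the same comparison once per supported viewport (phone, tablet, desktop) covers the device types mentioned above.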

Use the test

While this might sound obvious, this is not about using the tests only for the early tests. As other tests should be added over time (e.g. wdi5), these should be based on what is already captured. When the developer starts writing all the detailed tests, ensure these are based on what was already recorded by the user. The QUnit, OPA or wdi5 tests are to be understood as a refinement. The fact that the user is providing the recording of a user journey implies that they are also the owner of it. This ownership is sacred and must be fostered. The tests can be used to clean up code: what is not tested is dead code. Remove it. If you later find out this code was necessary, then the test scenarios are not complete. Guess who is responsible for these? Right. Not the developer.
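Candidates for dead code can be listed from a coverage report. The sketch below assumes a reduced form of an Istanbul-style JSON report, where each file entry has a function map (`fnMap`) and hit counts per function (`f`); the interface is simplified, and the function name `untestedFunctions` is hypothetical.

```typescript
// Reduced, assumed shape of one file entry in a coverage report.
interface FileCoverage {
  path: string;
  fnMap: { [id: string]: { name: string } };
  f: { [id: string]: number }; // hit count per function id
}

// Lists every function that was never called while the tests ran.
function untestedFunctions(coverage: { [file: string]: FileCoverage }): string[] {
  const result: string[] = [];
  Object.keys(coverage).forEach((file) => {
    const entry = coverage[file];
    Object.keys(entry.fnMap).forEach((id) => {
      if ((entry.f[id] ?? 0) === 0) {
        result.push(`${entry.path}: ${entry.fnMap[id].name}`);
      }
    });
  });
  return result;
}
```

The list is a starting point for the discussion with the users, not a delete list: each entry is either dead code or a missing scenario.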

Next steps

For each of the three recommended approaches I’ll write a blog post and share the code. The tools used are:

- SAP UX mock server and proxy

- Chrome recorder

- Puppeteer and BackstopJS
